How to configure microservices with docker-compose?

We know Docker, we know docker-compose, we know microservices, and we also know API, CQRS, gRPC, ELK, MongoDB, and so on. But do we know how to use all of them together? :)

We will discuss how to configure a microservice project that combines multiple technologies, frameworks, and patterns with docker-compose.

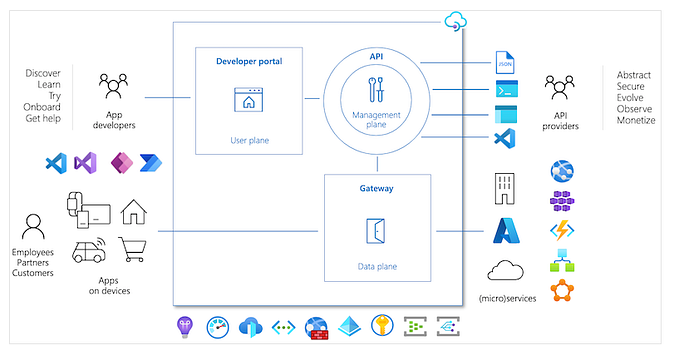

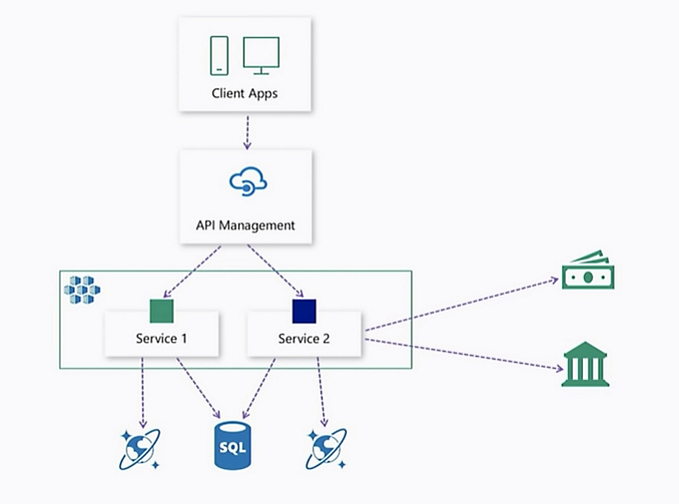

Consider a microservice application that contains several services, a gateway that manages access to them, a frontend for the client side, an identity provider, tracing, logging, and several different data stores. That is all good, but how do we get to the point where all of them start with a single command and communicate with each other reliably?

Setting up and managing each application by hand in separate containers is impractical. So let's look at how docker-compose, which lets us define and run a complex application from a single configuration file, can be used to configure an example microservice application together with everything it requires.

Our microservice application is essentially an e-commerce application modeled on a sample published by Microsoft. You can access Microsoft’s sample here.

The application consists of the following services: Catalog.API for product management, Basket.API for basket management, and Discount.API for discount management, all developed as REST APIs on the .NET 6 framework; Discount.Grpc, built with gRPC to quickly query and apply the discounts defined on products; and Ordering.API, written with the OneFrame API template to sample a different template within the project and to develop APIs quickly.

On the frontend, the OneFrame MVC template was used, which brings OneFrame’s quality standards with it.

Ocelot is used as the API gateway, tracing is handled by Jaeger, and log management is handled by ELK.

Products are stored and managed in MongoDB, discounts in PostgreSQL, orders in MSSQL, and baskets in Redis. In addition, both EF Core and Dapper are sampled as data access tools in different services.

For messaging between basket and order management, the MassTransit message bus is configured with RabbitMQ.

Since IdentityServer has moved to a paid license, we looked for an alternative, and Keycloak took on the identity provider role in this microservice application.

The result is a platform that includes many different patterns, such as CQRS, and applies clean code and SOLID principles.

Let’s add an image of the solution so that it is easier to picture :)

Well, we have talked about a lot of technologies and frameworks, and we have seen that different services have different requirements. Now let’s talk about how to set them up with docker-compose, starting with the configuration of the individual services.

Besides the docker-compose.yml file, files named like docker-compose.<environment_name>.yml can be used to separate basic definitions from detailed ones. This makes the configuration easier to manage and read, and it also lets us separate the development, test, and production environments. We keep the basic definitions in the docker-compose.yml file and configure the options of the services and related images in detail in the docker-compose.override.yml file. In the example code, I separated these two files with comment lines so that both can be shown in a single listing.
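As a rough illustration of this layering, the sketch below shows what an environment-specific file could look like. The file name docker-compose.prod.yml and the values in it are assumptions made for illustration and are not part of the sample project; catalog.api is the catalog service configured in the next section. Compose reads docker-compose.yml and docker-compose.override.yml automatically when no -f option is given; once files are listed with -f, only the listed files are merged, in the order given.

```yaml
# docker-compose.prod.yml (hypothetical file name and values)
#
# Default development run (base + override are picked up automatically):
#   docker-compose up -d
#
# Run with the production file instead of the override file:
#   docker-compose -f docker-compose.yml -f docker-compose.prod.yml up -d
services:
  catalog.api:
    restart: always
    environment:
      - ASPNETCORE_ENVIRONMENT=Production
    ports:
      - "8000:80"
```

Because the override file is skipped in the second command, a real production file would also have to carry any settings (connection strings, log targets) that the service cannot take from its appsettings file.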

Let’s walk through the basic configuration using the catalog service’s YAML files as an example:

Our Catalog.API service requires MongoDB. In the docker-compose.yml file, the catalogdb definition specifies the image name and the network. (All services communicate over the same network.) Likewise, the catalog.api definition specifies the build context, the location of the Dockerfile, and the name of the image that the build produces. The volume and network definitions used by these services are also made in this file. The override file is where we define the details of these services: options such as the container name, ports, volumes, and environment variables that each service needs in order to start. The set of options grows or shrinks according to the needs of each service.

    # docker-compose.yml
    services:
      catalogdb:
        image: mongo
        networks:
          - backend

      catalog.api:
        image: ${DOCKER_REGISTRY-}catalogapi
        build:
          context: .
          dockerfile: src/Services/Catalog/Catalog.API/Dockerfile
        networks:
          - backend

    volumes:
      mongo_data:

    networks:
      backend:

    # docker-compose.override.yml
    services:
      catalogdb:
        container_name: catalogdb
        restart: always
        ports:
          - "27017:27017"
        volumes:
          - mongo_data:/data/db

      catalog.api:
        container_name: catalog.api
        environment:
          - ASPNETCORE_ENVIRONMENT=Development
          - "DatabaseSettings:ConnectionString=mongodb://catalogdb:27017"
          # Environment definition for logging to Logstash
          - "Logging:Providers:NetworkTarget:Host=tcp://logstash:50000"
          # Environment definition for tracing with Jaeger
          - "Tracing:Jaeger:http-thrift=http://jaeger:14268/api/traces"
        depends_on:
          - catalogdb
        ports:
          - "8000:80"

In our microservice project, Elasticsearch backs the logging mechanism used by every service, and the ELK stack is used for log management. To configure the ELK services, let’s create our docker-compose.yml and docker-compose.override.yml files as follows.

NOTE: (…) indicates a continuation of the previous YAML file content. I write separate YAML fragments only to show the relevant services and volumes. You can merge them at the end.

For the ELK installation, there are some configuration files under the .elk folder in the root directory. You can access this folder structure via this GitHub link. Variables such as ELASTIC_VERSION and ELASTIC_PASSWORD are defined in the environment settings in this directory so that the services can access them. With these in place, we have completed the configuration needed to create the elasticsearch, logstash, and kibana services.
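The ${...} references used in these files rely on Compose variable interpolation, which reads values from the shell environment or from a .env file in the project directory. The fragment below is a minimal sketch of the forms that matter here; the service name example and the image name exampleimage are placeholders for illustration and are not part of the project.

```yaml
services:
  example:                      # hypothetical service, for illustration only
    # "-"  : use the default (here: empty) only when DOCKER_REGISTRY is unset
    image: ${DOCKER_REGISTRY-}exampleimage
    build:
      context: .
      args:
        # no default: an unset variable becomes an empty string (Compose prints a warning)
        ELASTIC_VERSION: ${ELASTIC_VERSION}
    environment:
      # ":-" : use the default (here: empty) when the variable is unset OR empty
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
      # ":?" : abort with this message when the variable is unset or empty
      KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:?set KIBANA_SYSTEM_PASSWORD first}
```

The files in this article use only the first three forms; the ":?" form is shown as an option for values that should never be silently empty, such as passwords.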

    # docker-compose.yml
    services:
      # ...
      elksetup:
        build:
          context: .elk/setup/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        networks:
          - backend

      elasticsearch:
        build:
          context: .elk/elasticsearch/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        networks:
          - backend

      logstash:
        build:
          context: .elk/logstash/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        networks:
          - backend

      kibana:
        build:
          context: .elk/kibana/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        networks:
          - backend

    volumes:
      # ...
      setup:
      elasticsearch:

    # docker-compose.override.yml
    services:
      # ...
      elksetup:
        init: true
        container_name: elksetup
        volumes:
          - ./.elk/setup/state:/state:Z
        environment:
          ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
          LOGSTASH_INTERNAL_PASSWORD: ${LOGSTASH_INTERNAL_PASSWORD:-}
          KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:-}
        depends_on:
          - elasticsearch

      elasticsearch:
        container_name: elasticsearch
        volumes:
          - ./.elk/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml:ro,z
          - ./.elk/elasticsearch/data:/usr/share/elasticsearch/data:z
        ports:
          - "9200:9200"
          - "9300:9300"
        environment:
          ES_JAVA_OPTS: -Xms512m -Xmx512m
          ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
          discovery.type: single-node

      logstash:
        container_name: logstash
        volumes:
          - ./.elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro,Z
          - ./.elk/logstash/pipeline:/usr/share/logstash/pipeline:ro,Z
        ports:
          - "5044:5044"
          - "50000:50000/tcp"
          - "50000:50000/udp"
          - "9600:9600"
        environment:
          LS_JAVA_OPTS: -Xms256m -Xmx256m
          LOGSTASH_INTERNAL_PASSWORD: ${LOGSTASH_INTERNAL_PASSWORD:-}
        depends_on:
          - elasticsearch

      kibana:
        container_name: kibana
        volumes:
          - ./.elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml:ro,Z
        ports:
          - "5601:5601"
        environment:
          KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:-}
        depends_on:
          - elasticsearch

Let’s talk about the configuration of the Discount.API service, where the discounts defined for the products are managed. Besides the Discount.API service, which is responsible for the CRUD operations on these discounts, we will also configure the Discount.Grpc service, which checks whether a product has a discount when it is added to the basket and applies that discount to the basket. As mentioned before, the discount data is kept in PostgreSQL. For this reason, we configure a PostgreSQL image for these services to work and a pgAdmin image for data management, both as services. After specifying the relevant images in the docker-compose.yml file, we define the configuration needed to create the containers in the override file. In the environment section of the discountdb service, we define the user, password, and database name so that we can connect to the database. Likewise, the environment sections of the discount.api and discount.grpc services override the values specified in the appsettings file, and the containers start with the values given in this configuration.

    # docker-compose.yml
    services:
      # ...
      discountdb:
        image: postgres:14
        networks:
          - backend

      pgadmin:
        image: dpage/pgadmin4
        networks:
          - backend

      discount.api:
        image: ${DOCKER_REGISTRY-}discountapi
        build:
          context: .
          dockerfile: src/Services/Discount/Discount.API/Dockerfile
        networks:
          - backend

      discount.grpc:
        image: ${DOCKER_REGISTRY-}discountgrpc
        build:
          context: .
          dockerfile: src/Services/Discount/Discount.Grpc/Dockerfile
        networks:
          - backend

    volumes:
      # ...
      postgres_data:
      pgadmin_data:

    # docker-compose.override.yml
    services:
      # ...
      discountdb:
        container_name: discountdb
        environment:
          - POSTGRES_USER=<YOUR_USER>
          - POSTGRES_PASSWORD=<YOUR_PASSWORD>
          - POSTGRES_DB=DiscountDb
        restart: always
        ports:
          - "5432:5432"
        volumes:
          - postgres_data:/var/lib/postgresql/data/

      pgadmin:
        container_name: pgadmin
        environment:
          - PGADMIN_DEFAULT_EMAIL=<YOUR_EMAIL>
          - PGADMIN_DEFAULT_PASSWORD=<YOUR_PASSWORD>
        restart: always
        ports:
          - "5050:80"
        volumes:
          # pgAdmin's container docs list /var/lib/pgadmin as its data directory;
          # adjust this path if pgAdmin data does not persist
          - pgadmin_data:/root/.pgadmin

      discount.api:
        container_name: discount.api
        environment:
          - ASPNETCORE_ENVIRONMENT=Development
          - "DatabaseSettings:ConnectionString=Server=discountdb;Port=5432;Database=DiscountDb;User Id=<YOUR_USERID>;Password=<YOUR_PASSWORD>;"
          - "Logging:Providers:NetworkTarget:Host=tcp://logstash:50000"
          - "Tracing:Jaeger:http-thrift=http://jaeger:14268/api/traces"
        depends_on:
          - discountdb
        ports:
          - "8002:80"

      discount.grpc:
        container_name: discount.grpc
        environment:
          - ASPNETCORE_ENVIRONMENT=Development
          - "DatabaseSettings:ConnectionString=Server=discountdb;Port=5432;Database=DiscountDb;User Id=<YOUR_USERID>;Password=<YOUR_PASSWORD>;"
          - "Logging:Providers:NetworkTarget:Host=tcp://logstash:50000"
          - "Tracing:Jaeger:http-thrift=http://jaeger:14268/api/traces"
        depends_on:
          - discountdb
        ports:
          - "8003:80"

Let’s move on to the Basket.API service. This service handles adding products to the basket, deleting products from the basket, and checkout, which queues the products in the basket on RabbitMQ. Since the Basket.API service also communicates with the Discount.Grpc service, we must specify all of these requirements in the configuration and make sure that the services it depends on are started.

    # docker-compose.yml
    services:
      # ...
      rabbitmq:
        image: rabbitmq:3-management-alpine
        networks:
          - backend

      basketdb:
        image: redis:alpine
        networks:
          - backend

      basket.api:
        image: ${DOCKER_REGISTRY-}basketapi
        build:
          context: .
          dockerfile: src/Services/Basket/Basket.API/Dockerfile
        networks:
          - backend

    # docker-compose.override.yml
    services:
      # ...
      rabbitmq:
        container_name: rabbitmq
        restart: always
        ports:
          - "5672:5672"
          - "15672:15672"

      basketdb:
        container_name: basketdb
        restart: always
        ports:
          - "6379:6379"

      basket.api:
        container_name: basket.api
        environment:
          - ASPNETCORE_ENVIRONMENT=Development
          - "CacheSettings:ConnectionString=basketdb:6379"
          - "GrpcSettings:DiscountUrl=http://discount.grpc"
          - "ServiceBus:Host=amqp://rabbitmq:5672"
          - "ServiceBus:Username=<YOUR_USERNAME>"
          - "ServiceBus:Password=<YOUR_PASSWORD>"
          - "ServiceBus:RequestHeartbeat=5"
          - "Logging:Providers:NetworkTarget:Host=tcp://logstash:50000"
          - "Tracing:Jaeger:http-thrift=http://jaeger:14268/api/traces"
        depends_on:
          - basketdb
          - rabbitmq
          - discount.grpc
        ports:
          - "8001:80"

The Ordering.API service is responsible for orders. It creates an order by reading the products that the basket checkout sends to RabbitMQ. This service keeps its data in MSSQL. Therefore, MSSQL should be run as a container, and it should be declared as a dependency in the Ordering.API configuration.

    # docker-compose.yml
    services:
      # ...
      orderdb:
        image: mcr.microsoft.com/mssql/server:2022-latest
        networks:
          - backend

      ordering.api:
        image: ${DOCKER_REGISTRY-}orderingapi
        build:
          context: .
          dockerfile: src/Services/Ordering/Presentation/Ordering.WebAPI/Dockerfile
        networks:
          - backend

    # docker-compose.override.yml
    services:
      # ...
      orderdb:
        container_name: orderdb
        environment:
          - MSSQL_SA_PASSWORD=<YOUR_PASSWORD>
          - ACCEPT_EULA=Y
        restart: always
        ports:
          - "1433:1433"

      ordering.api:
        container_name: ordering.api
        environment:
          - ASPNETCORE_ENVIRONMENT=Development
          - "Data:MainDbContext:ConnectionString=Data Source=orderdb;Initial Catalog=orderingDb;Application Name=OneFrameMicroServices;User Id=<YOUR_USERID>;Password=<YOUR_PASSWORD>"
          - "ServiceBus:Host=amqp://rabbitmq:5672"
          - "ServiceBus:Username=guest"
          - "ServiceBus:Password=guest"
          - "ServiceBus:RequestHeartbeat=5"
          - "Logging:Providers:NetworkTarget:Host=tcp://logstash:50000"
          - "Tracing:Jaeger:http-thrift=http://jaeger:14268/api/traces"
        depends_on:
          - orderdb
          - rabbitmq
        ports:
          - "8004:80"

We need an API management tool to expose all of the services we have created to the client. Ocelot takes on the API gateway role in our project. The Ocelot configuration can be created as follows.

    # docker-compose.yml
    services:
      # ...
      ocelotapigw:
        image: ${DOCKER_REGISTRY-}ocelotapigw
        build:
          context: .
          dockerfile: src/ApiGateways/OcelotApiGw/Dockerfile
        networks:
          - backend

    # docker-compose.override.yml
    services:
      # ...
      ocelotapigw:
        container_name: ocelotapigw
        environment:
          - ASPNETCORE_ENVIRONMENT=Development
          - "Logging:Providers:NetworkTarget:Host=tcp://logstash:50000"
        depends_on:
          - catalog.api
          - basket.api
          - discount.api
          - ordering.api
        ports:
          - "8010:80"

Keycloak is used as the identity provider for authentication and authorization in our microservice project. Let’s define the Keycloak configuration and use it in the frontend project.

    # docker-compose.yml
    services:
      # ...
      keycloak:
        image: quay.io/keycloak/keycloak:18.0.1
        networks:
          - backend

    # docker-compose.override.yml
    services:
      # ...
      keycloak:
        container_name: keycloak
        environment:
          - KEYCLOAK_VERSION=18.0.1
          - KEYCLOAK_ADMIN=<YOUR_USER>
          - KEYCLOAK_ADMIN_PASSWORD=<YOUR_PASSWORD>
          # If enabled, health checks are available at the '/health',
          # '/health/ready' and '/health/live' endpoints.
          - KC_HEALTH_ENABLED=true
          # If enabled, metrics are available at the '/metrics' endpoint.
          - KC_METRICS_ENABLED=true
        command:
          - start-dev
          - '-Dkeycloak.migration.action=import'
          - '-Dkeycloak.migration.provider=dir'
          - '-Dkeycloak.migration.dir=/data/import/'
          - '-Dkeycloak.migration.strategy=OVERWRITE_EXISTING'
        ports:
          - "1000:8080"
          - "2443:8443"
        restart: unless-stopped
        volumes:
          - ./.keycloak:/data/import/

Our frontend project, which we created with the OneFrame MVC template, is the client side of this shopping microservice application. Here we can list the products, add them to the cart, and finally create an order. By exercising all of the services through the frontend project, we can follow the logs of the whole flow in Kibana. Let’s also add the MVC configuration.

    # docker-compose.yml
    services:
      # ...
      frontends.mvc:
        image: ${DOCKER_REGISTRY-}frontendsmvc
        build:
          context: .
          dockerfile: src/Frontends/Mvc/Presentation/Frontends.Mvc/Dockerfile
        networks:
          - backend

    # docker-compose.override.yml
    services:
      # ...
      frontends.mvc:
        container_name: frontends.mvc
        environment:
          - ASPNETCORE_ENVIRONMENT=Development
          - "ASPNETCORE_URLS=https://+:443;http://+:80"
          - "Logging:Providers:NetworkTarget:Host=tcp://logstash:50000"
          - "ApiSettings:CatalogServiceMicroserviceClient:Uri=http://ocelotapigw"
          - "ApiSettings:BasketApi:Uri=http://basket.api"
          - "ApiSettings:OrderingApi:Uri=http://ordering.api"
          - "ApiSettings:OcelotGw:Uri=http://ocelotapigw"
          - "Tracing:Jaeger:http-thrift=http://jaeger:14268/api/traces"
        depends_on:
          - catalog.api
          - basket.api
          - discount.grpc
          - discount.api
          - ordering.api
          - ocelotapigw
        ports:
          - "3000:443"
          - "3001:80"

Tracing plays an important role in microservice projects because it lets us keep track of the requests, responses, errors, and bottlenecks across all of our services. We integrated Jaeger into our services for tracing. Let’s add its docker-compose configuration here.

    # docker-compose.yml
    services:
      # ...
      jaeger:
        image: jaegertracing/all-in-one
        networks:
          - backend

    # docker-compose.override.yml
    services:
      # ...
      jaeger:
        container_name: jaeger
        ports:
          - "6831:6831/udp"
          - "14268:14268"
          - "16686:16686"

I wanted to show how the different technologies used in a microservice project can be configured together. You can split all of these YAML fragments between docker-compose.yml and docker-compose.override.yml and combine their configurations. There may be a few differences from the structure sampled by Microsoft, but these YAML files should be helpful as you bring the projects up step by step. In short, we went over how docker-compose works in a basic microservice project.
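One caveat when combining these files: the short depends_on lists used throughout only control start order; they do not wait for a database or broker to be ready to accept connections. A possible refinement, sketched below for the discount services, is to pair a healthcheck with the long depends_on form. This is a sketch rather than part of the sample project: the interval, timeout, and retry values are assumptions, pg_isready is the readiness tool that ships with PostgreSQL, and the long depends_on form needs a Compose version that implements the Compose Specification.

```yaml
# docker-compose.override.yml (sketch; timing values are assumptions)
services:
  discountdb:
    healthcheck:
      # "$$" stops Compose from interpolating, so the shell inside the
      # container expands POSTGRES_USER and POSTGRES_DB at check time
      test: ["CMD-SHELL", "pg_isready -U $${POSTGRES_USER} -d $${POSTGRES_DB}"]
      interval: 10s
      timeout: 5s
      retries: 5

  discount.api:
    depends_on:
      discountdb:
        condition: service_healthy   # start only after the health check passes
```

The same idea could be applied to the other data stores and to RabbitMQ, using whatever readiness command each image provides.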

Thank you for reading